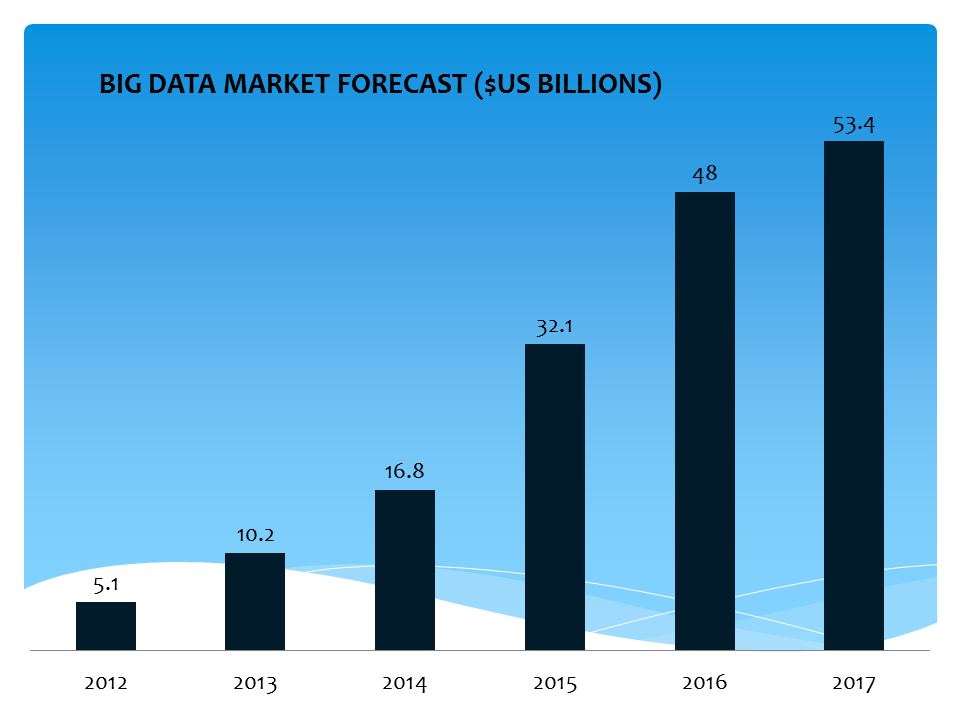

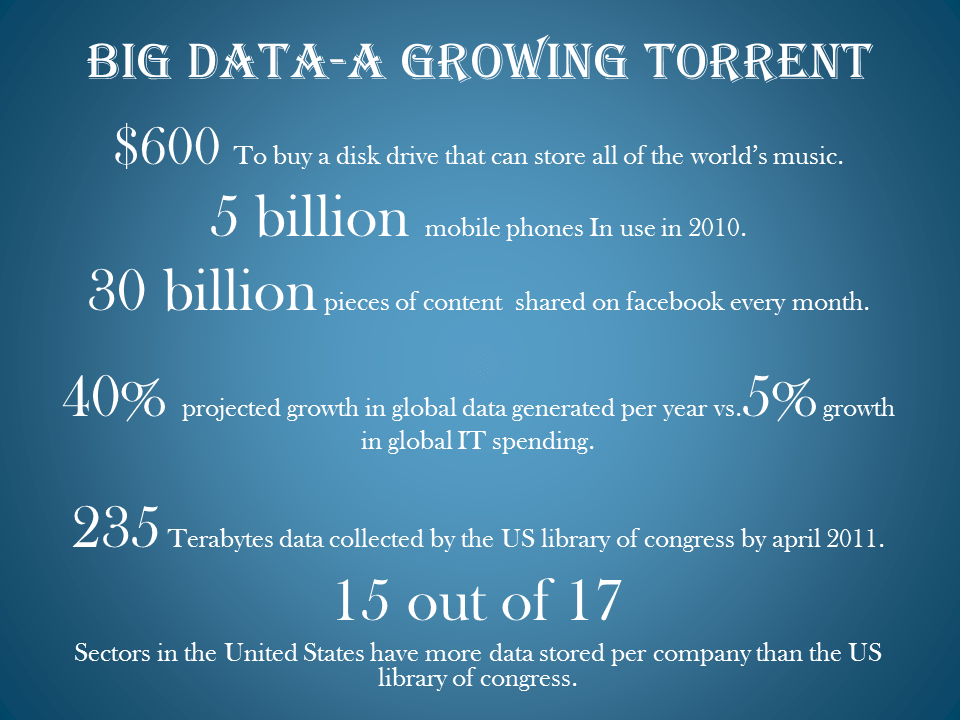

Hello friends, today we are going to learn about one of the fastest-emerging technologies of the 21st century: Big Data. Everyone seems to be talking about it, but what is big data really? How is it changing the way researchers at companies, non-profits, governments, institutions, and other organizations are learning about the world around them? Where is this data coming from, how is it being processed, and how are the results being used? And why is open source so important in answering these questions? Ever thought about that?

Well, ‘Big Data’ describes the innovative techniques and technologies used to capture, store, distribute, manage, and analyze petabyte-scale or larger datasets that arrive at high velocity and come in different structures. Big data can be structured, semi-structured, or unstructured, which is why conventional data management methods are unable to handle it.

Data is generated from many different sources and can arrive in the system at various rates. To process these large amounts of data in an inexpensive and efficient way, parallelism is used. Big data is data whose scale, diversity, and complexity require new architectures, techniques, algorithms, and analytics to manage it and to extract value and hidden knowledge from it.
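To make the idea of parallelism a bit more concrete, here is a minimal sketch in Python. It splits a list of text lines into chunks, counts the words in each chunk in a separate worker, and then merges the partial results. The function names (`count_words`, `parallel_word_count`) and the sample data are made up for illustration, and a thread pool on one machine only stands in for the many machines a real big data system would use.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor


def count_words(chunk):
    """Count word occurrences in one chunk of lines."""
    counts = Counter()
    for line in chunk:
        counts.update(line.lower().split())
    return counts


def parallel_word_count(lines, workers=4):
    """Split lines into chunks, count each chunk in a worker, then merge."""
    # Ceiling division so every line lands in exactly one chunk.
    chunk_size = max(1, -(-len(lines) // workers))
    chunks = [lines[i:i + chunk_size] for i in range(0, len(lines), chunk_size)]

    total = Counter()
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Each chunk is processed independently; results are merged here.
        for partial in pool.map(count_words, chunks):
            total.update(partial)
    return total


# Hypothetical sample data standing in for a much larger dataset.
sample = [
    "big data is big",
    "data arrives fast",
    "big data needs new tools",
]
result = parallel_word_count(sample, workers=2)
print(result["big"], result["data"])
```

The important part is the pattern: split the data, work on the pieces independently, and combine the results. That same pattern is what lets big data tools spread work across many cheap machines.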

What is Big Data?

Big data is a term that refers to data sets, or combinations of data sets, whose size (volume), complexity (variability), and rate of growth (velocity) make them difficult to capture, manage, process, or analyze with conventional technologies and tools, such as relational databases and desktop statistics or visualization packages, within the time necessary to make them useful.

While the size used to determine whether a particular data set is considered big data is not firmly defined and continues to change over time, most analysts and practitioners currently refer to data sets from 30-50 terabytes (10^12 bytes, or 1,000 gigabytes per terabyte) to multiple petabytes (10^15 bytes, or 1,000 terabytes per petabyte) as big data.
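If the unit arithmetic above feels abstract, a few lines of Python can check it. This is only a small sketch using the decimal units quoted in the paragraph; the `UNITS` table and the `convert` helper are names chosen here for illustration.

```python
# Decimal (SI) storage units, expressed in bytes.
UNITS = {
    "GB": 10**9,
    "TB": 10**12,
    "PB": 10**15,
}


def convert(value, from_unit, to_unit):
    """Convert a size between two units listed in UNITS."""
    return value * UNITS[from_unit] / UNITS[to_unit]


print(convert(1, "TB", "GB"))   # gigabytes in one terabyte
print(convert(1, "PB", "TB"))   # terabytes in one petabyte
print(convert(50, "TB", "PB"))  # 50 terabytes expressed in petabytes
```

So the 30-50 terabyte range mentioned above is still only a few hundredths of a petabyte.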

Three Characteristics of Big Data

Big Data Volume: Volume refers to the amount of data. The volume of data stored in enterprise repositories has grown from megabytes and gigabytes to petabytes.

Big Data Velocity: Velocity refers to the speed at which data arrives and has to be processed. For time-sensitive processes such as catching fraud, big data must be used as it streams into your enterprise in order to maximize its value.

Big Data Variety: Variety refers to the different types and sources of data. Data variety has exploded from structured and legacy data stored in enterprise repositories to unstructured and semi-structured data such as audio, video, and XML.
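To get a feel for variety, here is a small sketch that reads one made-up record in three shapes: a structured CSV row, a semi-structured JSON document, and an XML fragment. All of the sample data is hypothetical, and only Python's standard library is used.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical sample data: the same order in three formats.
csv_text = "id,name,amount\n1,Asha,250\n"
json_text = '{"id": 1, "name": "Asha", "tags": ["new", "mobile"]}'
xml_text = "<order><id>1</id><name>Asha</name></order>"

# Structured: fixed columns, every value comes back as a string.
csv_row = next(csv.DictReader(io.StringIO(csv_text)))

# Semi-structured: fields can nest and vary from record to record.
json_record = json.loads(json_text)

# XML: a tree of tagged elements, flattened here into a dict.
xml_root = ET.fromstring(xml_text)
xml_record = {child.tag: child.text for child in xml_root}

print(csv_row["name"], json_record["tags"], xml_record["id"])
```

Each format needs its own parser and gives back a slightly different shape, and that is before audio or video even enter the picture. Handling this mix is a big part of what makes big data hard for conventional tools.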

So guys, that is enough for today about big data and its characteristics. I hope you all got a little bit of an idea about it. Next, we’ll share with you one of the leading tools used to analyze big data.

Till then, keep reading and sharing, and do give feedback. If you have any questions about today’s blog, feel free to post them below in the comments.