Apache Hadoop is an open source software platform for distributed storage and distributed processing of very large data sets on computer clusters built from commodity hardware. Hadoop services provide for data storage, data processing, data access, data governance, security, and operations.

History

The genesis of Hadoop came from the Google File System paper, published in October 2003. This paper spawned another Google research paper, “MapReduce: Simplified Data Processing on Large Clusters.” Development started in the Apache Nutch project but was moved to the new Hadoop subproject in January 2006. The first committer added to the Hadoop project was Owen O’Malley in March 2006. Hadoop 0.1.0 was released in April 2006, and Hadoop continues to evolve through the work of the many contributors to the Apache Hadoop project. Hadoop was named after a toy elephant belonging to the son of one of its founders.

In 2011, Rob Bearden partnered with Yahoo! to establish Hortonworks with 24 engineers from the original Hadoop team including founders Alan Gates, Arun Murthy, Devaraj Das, Mahadev Konar, Owen O’Malley, Sanjay Radia, and Suresh Srinivas.

Why is Hadoop important?

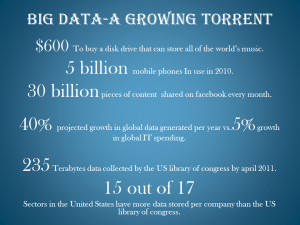

- Ability to store and process huge amounts of any kind of data, quickly. With data volumes and varieties constantly increasing, especially from social media and the Internet of Things (IoT), that’s a key consideration.

- Computing power. Hadoop’s distributed computing model processes big data fast. The more computing nodes you use, the more processing power you have.

- Fault tolerance. Data and application processing are protected against hardware failure. If a node goes down, jobs are automatically redirected to other nodes to make sure the distributed computing does not fail. Multiple copies of all data are stored automatically.

- Flexibility. Unlike traditional relational databases, you don’t have to preprocess data before storing it. You can store as much data as you want and decide how to use it later. That includes unstructured data like text, images and videos.

- Low cost. The open-source framework is free and uses commodity hardware to store large quantities of data.

- Scalability. You can easily grow your system to handle more data simply by adding nodes. Little administration is required.
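The fault-tolerance point above (multiple copies of all data, work redirected when a node goes down) can be illustrated with a toy model. This is a minimal in-memory sketch, not HDFS code: the `ToyCluster` class, its method names, and its round-robin placement are hypothetical. The replication factor of 3 mirrors the usual HDFS default for `dfs.replication`.

```python
import itertools


class ToyCluster:
    """Hypothetical in-memory model of replicated block storage (not the HDFS API)."""

    def __init__(self, nodes, replication=3):
        if replication > len(nodes):
            raise ValueError("replication factor cannot exceed the number of nodes")
        self.nodes = list(nodes)
        self.replication = replication
        self.alive = set(self.nodes)
        self.placement = {}  # block id -> list of nodes holding a copy
        self._next_start = itertools.cycle(range(len(self.nodes)))

    def store(self, block_id):
        # Round-robin placement: each block gets `replication` copies on distinct nodes.
        start = next(self._next_start)
        n = len(self.nodes)
        self.placement[block_id] = [self.nodes[(start + i) % n] for i in range(self.replication)]

    def fail(self, node):
        # Simulate a hardware failure: the node stops serving data.
        self.alive.discard(node)

    def read_from(self, block_id):
        # Redirect the read to the first live replica; fail only if every copy is gone.
        for node in self.placement[block_id]:
            if node in self.alive:
                return node
        raise IOError(f"all replicas of {block_id} are unavailable")
```

With four nodes, storing two blocks and then failing `n2` still lets both blocks be read, because each has other live replicas. Real HDFS uses a rack-aware placement policy rather than round-robin; the simple rotation here only keeps the example short.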

What are the challenges of using Hadoop?

MapReduce programming is not a good match for all problems. It’s good for simple information requests and problems that can be divided into independent units, but it’s not efficient for iterative and interactive analytic tasks. MapReduce is file-intensive. Because the nodes don’t intercommunicate except through sorts and shuffles, iterative algorithms require multiple map-shuffle/sort-reduce phases to complete. This creates multiple files between MapReduce phases and is inefficient for advanced analytic computing.
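To make the cost of iteration concrete, here is a minimal pure-Python sketch (no Hadoop involved) of an iterative algorithm, connected components by minimum-label propagation, expressed as repeated map-shuffle/sort-reduce rounds. The helper names and the JSON files standing in for HDFS output are assumptions for illustration. The point is that every iteration is a full pass that writes its output to a file, and the next pass must re-read it.

```python
import json
import os
import tempfile
from collections import defaultdict


def map_reduce(records, mapper, reducer):
    """One MapReduce round: map every record, shuffle/sort by key, reduce each group."""
    groups = defaultdict(list)
    for record in records:
        for key, value in mapper(record):
            groups[key].append(value)  # shuffle: group values by key
    return [out for key in sorted(groups) for out in reducer(key, groups[key])]


def mapper(record):
    node, label, neighbors = record
    yield node, ("adj", neighbors)  # pass the adjacency list through
    yield node, ("label", label)
    for nbr in neighbors:
        yield nbr, ("label", label)  # propagate this node's label to its neighbors


def reducer(node, values):
    neighbors, labels = [], []
    for kind, payload in values:
        if kind == "adj":
            neighbors = payload
        else:
            labels.append(payload)
    yield [node, min(labels), neighbors]  # keep the smallest label seen


def connected_components(graph, workdir):
    """Run rounds until no label changes; each round materializes its output file."""
    records = [[n, n, nbrs] for n, nbrs in sorted(graph.items())]
    rounds = 0
    while True:
        new_records = map_reduce(records, mapper, reducer)
        rounds += 1
        path = os.path.join(workdir, f"round-{rounds}.json")
        with open(path, "w") as f:  # each job writes its intermediate output
            json.dump(new_records, f)
        with open(path) as f:  # and the next job starts by re-reading it
            new_records = json.load(f)
        if new_records == records:
            return {node: label for node, label, _ in records}, rounds
        records = new_records
```

For a small graph with a three-node chain and a separate pair, the labels need several full rounds (plus one more to detect convergence), and each round leaves an intermediate file behind, which is exactly the overhead described above.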

There’s a widely acknowledged talent gap. It can be difficult to find entry-level programmers who have sufficient Java skills to be productive with MapReduce. That’s one reason distribution providers are racing to put relational (SQL) technology on top of Hadoop. It is much easier to find programmers with SQL skills than MapReduce skills. And, Hadoop administration seems part art and part science, requiring low-level knowledge of operating systems, hardware and Hadoop kernel settings.

Data security. Another challenge is fragmented data security, though new tools and technologies are emerging. Support for the Kerberos authentication protocol is a significant step toward making Hadoop environments secure.

Full-fledged data management and governance. Hadoop does not have easy-to-use, full-feature tools for data management, data cleansing, governance and metadata. Especially lacking are tools for data quality and standardization.

Advantages and Disadvantages of Hadoop

Advantages

1. Distributed data and computation. Running computation locally, where the data resides, prevents network overload.

2. Independent tasks. Because tasks are independent:

- Partial failure is easy to handle: entire nodes can fail and restart.

- It avoids the crawling horrors of failure-tolerant synchronous distributed systems.

- Speculative execution can work around stragglers (unusually slow tasks).

- Scaling is linear in the ideal case. Hadoop was designed for cheap, commodity hardware.

3. Simple programming model. The end-user programmer writes only map and reduce tasks.

4. Flat scalability. One advantage of Hadoop over other distributed systems is its flat scalability curve. Running Hadoop on a limited amount of data on a small number of nodes may not show particularly stellar performance, because the overhead of starting Hadoop programs is relatively high. Other parallel/distributed programming paradigms such as MPI (Message Passing Interface) may perform much better on two, four, or perhaps a dozen machines. Although such systems may coordinate work among a small number of machines better, the price paid in performance and engineering effort (when adding more hardware as data volumes grow) increases non-linearly.

A program written in a distributed framework other than Hadoop may require extensive refactoring when scaling from ten to one hundred or one thousand machines. This may involve rewriting the program several times, and fundamental elements of its design may also put an upper bound on the scale to which the application can grow.

Hadoop, however, is specifically designed to have a very flat scalability curve. After a Hadoop program is written and functioning on ten nodes, very little, if any, work is required for that same program to run on a much larger amount of hardware. Orders of magnitude of growth can be managed with little rework of your applications. The underlying Hadoop platform manages the data and hardware resources and provides dependable performance growth proportionate to the number of machines available.
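Items 3 and 4 can be illustrated together with a toy, single-process sketch (not the Hadoop Java API): the programmer supplies only a map function and a reduce function, and the framework's partitioning spreads keys across however many reducers are configured. The function names and the `crc32`-based partitioner are assumptions for illustration; Hadoop's default partitioner similarly assigns each key by hash modulo the number of reduce tasks.

```python
import zlib
from collections import defaultdict


def word_count_mapper(line):
    # User-written map function: emit (word, 1) for every word.
    for word in line.lower().split():
        yield word, 1


def word_count_reducer(word, counts):
    # User-written reduce function: sum the counts for one word.
    yield word, sum(counts)


def run_job(lines, mapper, reducer, num_reducers):
    """Toy framework: the user code above never changes, only num_reducers does."""
    partitions = [defaultdict(list) for _ in range(num_reducers)]
    for line in lines:
        for key, value in mapper(line):
            # Hash partitioning decides which reducer receives each key.
            p = zlib.crc32(key.encode("utf-8")) % num_reducers
            partitions[p][key].append(value)
    output = {}
    for part in partitions:  # in a real cluster, each partition could run on a different node
        for key in sorted(part):
            for k, v in reducer(key, part[key]):
                output[k] = v
    return output
```

Running the same job with 1 reducer or 7 reducers gives identical word counts; only the degree of parallelism changes, which is the property the flat-scalability argument relies on.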

5. HDFS stores large amounts of information.

6. HDFS has a simple and robust coherency model.

7. HDFS should store data reliably.

8. HDFS is scalable and provides fast access to this information, and it can serve a large number of clients simply by adding more machines to the cluster.

9. HDFS should integrate well with Hadoop MapReduce, allowing data to be read and computed upon locally when possible.

10. HDFS provides streaming read performance.

11. Data will be written to the HDFS once and then read several times.

12. The overhead of caching is high enough that data should simply be re-read from the HDFS source.

13. Fault tolerance by detecting faults and applying quick, automatic recovery

14. Processing logic close to the data, rather than the data close to the processing logic

15. Portability across heterogeneous commodity hardware and operating systems

16. Economy by distributing data and processing across clusters of commodity personal computers

17. Efficiency by distributing data and logic to process it in parallel on nodes where data is located

18. Reliability by automatically maintaining multiple copies of data and automatically redeploying processing logic in the event of failures

19. HDFS is a block-structured file system: each file is broken into blocks of a fixed size, and these blocks are stored across a cluster of one or more machines with data storage capacity.

20. Ability to write MapReduce programs in Java, a language which even many noncomputer scientists can learn with sufficient capability to meet powerful data-processing needs

21. Ability to rapidly process large amounts of data in parallel

22. Can be deployed on large clusters of cheap commodity hardware as opposed to expensive, specialized parallel-processing hardware

23. Can be offered as an on-demand service, for example as part of Amazon’s EC2 cluster computing service
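Items 14, 17, and 19 (a block-structured file system with processing moved to the data) can be sketched as follows. This is a simplified, hypothetical model rather than Hadoop's scheduler: the function names, the round-robin replica placement, and the slot counts are assumptions, and HDFS's block size is configurable (a tiny size is used here only for readability).

```python
def split_into_blocks(data: bytes, block_size: int):
    """Break a file's bytes into fixed-size blocks (the last block may be shorter)."""
    return [data[i:i + block_size] for i in range(0, len(data), block_size)]


def place_blocks(num_blocks, nodes, replication):
    # Hypothetical round-robin placement of `replication` copies per block.
    return {b: [nodes[(b + r) % len(nodes)] for r in range(replication)]
            for b in range(num_blocks)}


def schedule_map_tasks(placement, free_slots):
    """Assign one map task per block, preferring a node that already holds the block."""
    assignment = {}
    slots = dict(free_slots)  # node -> number of free task slots
    for block, holders in sorted(placement.items()):
        local = [n for n in holders if slots.get(n, 0) > 0]
        if local:
            node, kind = local[0], "local"  # move the computation to the data
        else:
            candidates = [n for n, s in slots.items() if s > 0]
            if not candidates:
                raise RuntimeError("no free task slots")
            node, kind = candidates[0], "remote"  # fall back: data must cross the network
        slots[node] -= 1
        assignment[block] = (node, kind)
    return assignment
```

A 250-byte file with a 100-byte block size becomes three blocks; when the nodes holding the last block have no free slots, that task is the only one that must read its data remotely. Real Hadoop schedulers also consider rack locality, while this sketch distinguishes only node-local from remote.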

Disadvantages of Hadoop

1. Rough edges: Hadoop MapReduce and HDFS are rough around the edges, because the software is under active development.

2. Programming model is very restrictive: the lack of central data can be limiting.

3. Joins of multiple datasets are tricky and slow: there are no indices, and often an entire dataset gets copied in the process.

4. Cluster management is hard: operations such as debugging, distributing software, and collecting logs are difficult across a cluster.

5. There is still a single master, which requires care and may limit scaling.

6. Managing job flow isn’t trivial when intermediate data should be kept

7. Optimal configuration of nodes is not obvious, e.g. the number of mappers, the number of reducers, and memory limits.
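Disadvantage 3 can be made concrete with a minimal pure-Python sketch of a reduce-side join, a common way to join two datasets in MapReduce (chosen here as an illustration; the text above does not name a join strategy). Because there is no index to look up matching rows, every record from both inputs is tagged with its source and sent through the shuffle, even records that end up with no match. The function and field names are hypothetical.

```python
from collections import defaultdict


def reduce_side_join(left, right, left_key, right_key):
    """Join two lists of dicts on a key the way a MapReduce job would, without any index."""
    shuffled = defaultdict(list)
    shuffled_count = 0
    # Map phase: every record from BOTH datasets is tagged with its source and emitted.
    for tag, rows, key in (("L", left, left_key), ("R", right, right_key)):
        for row in rows:
            shuffled[row[key]].append((tag, row))
            shuffled_count += 1
    # Reduce phase: for each join key, pair every left record with every right record.
    joined = []
    for k in sorted(shuffled):
        values = shuffled[k]
        lefts = [row for tag, row in values if tag == "L"]
        rights = [row for tag, row in values if tag == "R"]
        for lrow in lefts:
            for rrow in rights:
                joined.append({**lrow, **rrow})
    return joined, shuffled_count
```

Joining two users with three orders shuffles all five records, although only two join results are produced; on real data sets this full copy through the shuffle is what makes such joins slow.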